Despite using common language, the Dungeons & Dragons rules feature such precise wording that a close reading answers most questions and foils many schemes to break the game. You can tell that the designers dreamed up plenty of min-maxing exploits and then engineered text that prevented any shenanigans.

Sometimes the implications of the game’s precise phrasing take experience to spot.

For example, the description for alchemist’s fire says, “Make a ranged attack against a creature or object, treating the alchemist’s fire as an improvised weapon.” That text includes plenty to unpack. Alchemist’s fire is treated as an improvised weapon, so unless you’re a tavern brawler, you don’t add your proficiency bonus to attack. Because the throw counts as a ranged attack, you add your Dexterity bonus to your attack roll. Most players miss the next implication: Ranged attacks add your Dexterity bonus to the damage roll. The specific rule for alchemist’s fire changes the general rule for when a ranged attack inflicts damage: “On a hit, the target takes 1d4 fire damage at the start of each of its turns.” As with any other damage bonus, the Dexterity bonus adds only once, not to every 1d4 the flames deal.
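For readers who like to see the arithmetic spelled out, here is a minimal Python sketch of this reading of alchemist’s fire. The function names are hypothetical, and the damage helper encodes the interpretation above (Dexterity counted once across all the burning turns) rather than any official ruling.

```python
def alchemists_fire_attack_bonus(dex_mod: int, proficiency_bonus: int,
                                 tavern_brawler: bool = False) -> int:
    """Attack-roll bonus for throwing alchemist's fire as an improvised weapon."""
    # The throw is a ranged attack, so it uses Dexterity.
    bonus = dex_mod
    # Improvised weapons only add proficiency if you are proficient with them,
    # for example through the Tavern Brawler feat.
    if tavern_brawler:
        bonus += proficiency_bonus
    return bonus


def alchemists_fire_total_damage(d4_rolls: list[int], dex_mod: int) -> int:
    """Total fire damage over every turn the target keeps burning.

    d4_rolls holds one 1d4 roll per start of the target's turn. Under the
    reading above, the Dexterity bonus counts once for the whole effect,
    not once per roll.
    """
    if not d4_rolls:
        return 0  # the flames never dealt damage (e.g. the attack missed)
    return sum(d4_rolls) + dex_mod
```

For example, a thrower with a +3 Dexterity modifier whose flames burn for three turns with rolls of 2, 4, and 1 deals 10 fire damage in total, not 16.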

(For another example of how a close reading of the rules differs from the common interpretation, check out the strict method for rolling damage from a magic missile.)

As I learned the D&D rules, I noticed phrases that once seemed innocuous but now prove important.

For example, consider the phrase “that you can see” in spell descriptions. Many spells require the caster to see the target of an effect. Invisibility rates as the game’s most potent defensive spell because so much magic requires sight for targeting. Sometimes the phrase “that you can see” turns against the players. Spirit Guardians lets casters spare any number of creatures they can see from the spell’s effect. Any invisible or otherwise out-of-sight allies must suffer the guardians’ effects.

Many monsters can cast spells “requiring no material components.” This enables a flameskull to cast Fireball despite lacking pockets full of bat guano and sulfur. (Flameskulls also cast without somatic components—an essential accommodation for their lack of hands.)

Monsters able to cast spells “requiring no components” gain a significant advantage: These creatures can cast spells without being interrupted by a Counterspell. Counterspell is cast when you see a creature casting a spell, and the rules state, “To be perceptible, the casting of a spell must involve a verbal, somatic, or material component.” With no components, no one notices the casting until it finishes.

The monsters able to cast without components mainly fall into two categories:

• psionic creatures like githyanki and mind flayers

• constructs

Many character features allow extra attacks “when you use the Attack action,” which creates a limitation that often goes unnoticed. For example, a monk’s extra unarmed strike requires an Attack action, so a monk cannot just take the Dash or Dodge action and then use a Bonus action to get some licks in. This same phrase prevents two-weapon rangers from casting a spell and then making an attack with their off-hand weapon.

Most extra attacks delivered “when you use the Attack action” cost a Bonus action, but the barbarian’s Form of the Beast feature lets you make extra claw attacks as part of your Attack action. This enables such barbarians to spend their Bonus action entering a rage and still make that extra attack.
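The action-economy logic in the last two paragraphs reduces to a pair of checks. This is a simplified Python sketch; `TurnPlan` and the helper functions are hypothetical names, and the sketch ignores other requirements such as wielding light weapons or actually attacking with a claw.

```python
from dataclasses import dataclass


@dataclass
class TurnPlan:
    action: str                       # the single action taken, e.g. "Attack" or "Dash"
    bonus_action_spent: bool = False  # True if already used, e.g. to enter a rage


def can_make_bonus_action_attack(turn: TurnPlan) -> bool:
    """Monk Martial Arts strike or two-weapon off-hand attack.

    Both hinge on taking the Attack action and cost a Bonus action,
    so they need the Attack action and an unspent Bonus action.
    """
    return turn.action == "Attack" and not turn.bonus_action_spent


def can_make_extra_claw_attack(turn: TurnPlan) -> bool:
    """Form of the Beast claws (simplified: assumes the attack uses a claw).

    The extra claw attack is part of the Attack action itself,
    so it costs no Bonus action.
    """
    return turn.action == "Attack"
```

A monk who takes the Dash action fails the first check, and a barbarian who spent the Bonus action raging fails the first check but still passes the second.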

The D&D rules overload the terms “attack,” “melee,” and “ranged,” giving them different meanings in different contexts. That can fuel confusion. The Attack action usually includes an attack (unless you choose to grapple). But sometimes you can make an attack with a Bonus action, often “when you use the Attack action.” Spellcasters can take the Cast a Spell action and then make a spell attack with something like a Fire Bolt. Spells like Booming Blade and Green-Flame Blade have you make a melee attack (and not a spell attack) with a weapon as part of the Cast a Spell action.

No wonder the 2nd edition of Pathfinder attempts to cut through the fog by calling a single attack a strike.

“Melee” and “ranged” can describe types of weapons and types of attacks. Usually the weapons and attacks stay in their lanes, but when you hurl a melee weapon it crosses into oncoming traffic.

A melee weapon, such as a dagger or handaxe, remains a melee weapon even when you make a ranged attack by throwing it. Normally a ranged attack adds your Dexterity bonus to damage, but the thrown property can change that general rule. The thrown property says, “If the weapon is a melee weapon, you use the same ability modifier for that attack roll and damage roll that you would use for a melee attack with the weapon. For example, if you throw a handaxe, you use your Strength, but if you throw a dagger, you can use either your Strength or your Dexterity, since the dagger has the finesse property.”

When used to make a ranged attack, melee weapons that lack the thrown property count as improvised weapons. They add your Dexterity bonus to the attack and damage rolls and deal 1d4 damage.
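The choices for throwing a melee weapon form a small decision tree. The following Python sketch models it with a hypothetical `MeleeWeapon` type; where the finesse property lets you choose, it assumes you pick the higher modifier.

```python
from dataclasses import dataclass


@dataclass
class MeleeWeapon:
    name: str
    damage_die: str
    thrown: bool = False
    finesse: bool = False


def throw_melee_weapon(weapon: MeleeWeapon, str_mod: int, dex_mod: int) -> tuple[int, str]:
    """Return (ability modifier, damage die) for a ranged attack made by throwing a melee weapon."""
    if weapon.thrown:
        # Thrown property: use the same modifier as a melee attack with the weapon.
        # Finesse allows Strength or Dexterity; this sketch takes the better one.
        mod = max(str_mod, dex_mod) if weapon.finesse else str_mod
        return mod, weapon.damage_die
    # No thrown property: an improvised weapon, so the ranged attack uses
    # Dexterity and deals 1d4 regardless of the weapon's usual damage.
    return dex_mod, "1d4"
```

So a handaxe (1d6, thrown) hurled by a strong but clumsy fighter uses Strength, a dagger uses whichever of Strength or Dexterity is better, and a longsword (1d8, no thrown property) becomes a 1d4 improvised weapon that uses Dexterity.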

If I were king of D&D, my edition would adopt “strike” for a single attack, and I would consider phrases like “close attack” and “distance attack” in place of the overworked “ranged” and “melee.”

Sometimes a close reading of the D&D rules leads to interpretations that might differ from what the designers first intended. Perhaps lead designer Jeremy Crawford got questions about sneak attack, reviewed the rules, and then thought, I didn’t mean that, but it still works.

Your rogue can use the sneak attack feature “once per turn,” but it’s not limited to your turn. During a round, rogues can sneak attack on their turn and again on someone else’s turn, typically when a foe provokes an opportunity attack.
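Because the limit is per turn, counting sneak attacks in a round means counting distinct turns with a qualifying hit. A minimal Python sketch, with a hypothetical function name, that identifies each turn by whose turn it is (a simplification that works because each creature gets one turn per round):

```python
def sneak_attack_uses(qualifying_hits: list[str]) -> int:
    """Count Sneak Attack uses in one round.

    qualifying_hits names, in order, whose turn each eligible hit landed on,
    such as "rogue" for the rogue's own attack or "ogre" for an opportunity
    attack made during the ogre's turn. Sneak Attack works once per turn,
    so each distinct turn allows one use.
    """
    # Each creature gets one turn per round, so the turn owner identifies the turn.
    return len(set(qualifying_hits))
```

Two eligible hits on the rogue’s own turn plus an opportunity attack on the ogre’s turn yield two sneak attacks, not three and not one.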

For spells like Wall of Fire and Blade Barrier, the distinction between turns and rounds also becomes important. These spells deal damage the first time you enter their area on a turn: anyone’s turn, not just your own. So if a monster gets forced through a Wall of Fire on consecutive turns, it accumulates more damage in a round than if it had just stayed in the fire. I suppose you get used to the heat.
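The turn-versus-round accounting can be sketched in Python too. The function is hypothetical and simplified: it counts only how often the damage triggers, folds the “stay inside” trigger (ending a turn in a Wall of Fire, starting one in a Blade Barrier) into a single flag, and treats the two triggers as independent, which is an assumption.

```python
def wall_damage_instances(entries_per_turn: dict[str, int],
                          stays_inside_own_turn: bool) -> int:
    """Count how many times a creature takes a wall spell's damage in one round.

    entries_per_turn maps each turn (named by whose turn it is) to how many
    times the creature entered the area during that turn. Only the first
    entry on a given turn deals damage, no matter whose turn it is.
    stays_inside_own_turn covers the once-per-round "staying" trigger.
    """
    # One damage instance per turn with at least one entry.
    from_entering = sum(1 for entries in entries_per_turn.values() if entries > 0)
    # At most one instance from lingering in the area.
    from_staying = 1 if stays_inside_own_turn else 0
    return from_entering + from_staying
```

A monster that simply stands in the fire takes damage once per round; one shoved through on the fighter’s, monk’s, and wizard’s turns takes it three times, even if the monk shoves it through twice.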

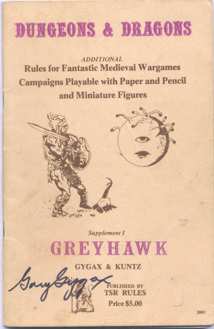

D&D’s designers seemed to think rising armor classes made more sense. The game rules stemmed from co-creator Gary Gygax’s
