When I drafted my list of supreme character builds for Dungeons & Dragons, I originally included a section that asked, “You can play this, but should you?” The answer became this post, but why even ask?

In a comment, designer Teos “Alphastream” Abadia identified the supreme builds as enjoyable concepts, but “generally horrible at the table.” Although any character can fit the right game, some optimized builds reduce the fun at most tables. Teos writes, “For me, the biggest social contract item for players is to contain whatever optimization they cook up to reasonable and fun levels.”

D&D’s design aims to create a game where all of a party’s characters get to contribute to the group’s success. When a single member of the group starts battles by one-shotting the monster with a huge burst of damage, or one character learns every skill to meet all challenges, then that character leaves the rest idle or overshadows them, and makes the other players wonder why they showed up.

Most skilled

DMs Guild designer Andrew Bishkinskyi singles out one optimization to skip. “The most skilled character is made to do everything, and exists by design to exclude others from play, which I don’t want.”

Early editions of D&D embraced this kind of specialization. Thieves started as the only class with any capabilities resembling skills, but rated as nearly useless in a fight. Nowadays, D&D’s class designs aim to give every class ways to contribute across all three of the game’s pillars: exploration, combat, and roleplaying interaction.

Most damaging

Combat makes up a big part of most D&D games, so characters optimized for extreme damage tend to prove troublesome. I’ve run public tables where newer players dealing single-digit damage would follow turns where optimized characters routinely dealt 50-some points. I saw the new folks trade discouraged looks as they realized their contributions hardly mattered. DM Thomas Christy has hosted as many online D&D games for strangers as anyone. He says, “I have actually had players complain in game and out about how it seemed like they did not need to be there.” In a Todd Talks episode, Jen Kretchmer describes asking a player to rebuild a combat-optimized character. “The character was a nightmare of doing way more damage off the top, and no one else could get a hit in.” See Sharpshooters Are the Worst Thing in D&D.

D&D’s strongest high-damage builds attack from range. Such builds can leave the rest of the party to bear the monsters’ attacks. Teos Abadia writes, “Even if we don’t have character deaths or a TPK, a ranged character can create a frustrating situation for the other characters, who find themselves relentlessly beaten up, constantly targeted by saving throws, and harried by environmental and terrain damage. Over the course of a campaign, this can be tough for the party. Players may not even realize the cause. They simply find play frustrating and feel picked on. If the ranged player keeps saying, ‘hey, I didn’t even take any damage—again!’ the rest of the party might start to realize why.”

If you, like everyone, enjoy dealing maximum damage, I recommend a character powered by the Great Weapon Master and Polearm Master feats. See How to Build a D&D Polearm Master That Might Be Better Than a Sharpshooter. If you favor a ranged attacker, the strongest builds combine Sharpshooter, Crossbow Expert, and an Extra Attack feature. In a typical game, pick two.

Biggest damage novas

A few D&D players welcome characters capable of starting a fight with a huge burst of damage for an unexpected reason: These gamers find D&D’s combat pillar tiresome. By bringing a fight to an immediate end, a nova just brings the session back to their fun. Perhaps these games need a better approach to combat, or even a switch to a different game.

In groups more interested in roleplaying and exploration, players might not mind letting an optimized character showboat during the battles. Or perhaps others in the group feel content in roles other than damage dealing. Perhaps the bard and wizard both enjoy their versatility, the druid likes turning into a bat and scouting, and nobody minds letting you finish encounters at the top of round 1.

But most gamers enjoy a mix of D&D’s three pillars. For these players, characters designed to start fights with maximum damage prove problematic because when they work, no one else participates. “The issue is that even if those characters don’t completely trivialize an encounter, they can reduce the fun of other players by taking a disproportionate amount of the spotlight,” writes @UncannyPally.

You can’t blame the players aiming for these builds. The occasional nova can create memorable moments.

“It’s only fun the first few times a character charges in and essentially one-shots the boss before you get to do anything,” writes @pocketfell. “And of course, upping the hp of the monsters just means that when the mega-damage PC doesn’t get lucky, it’s a slog through four times the usual number of hp.”

I suspect that D&D class features that power damage spikes steer the game in the wrong direction. However, I respect D&D’s designers, and they seem to welcome such features. For example, paladins can smite multiple times per turn. In more recent designs, rangers with the Gloom Stalker archetype begin fights with an extra attack plus extra damage. The Grave domain cleric’s Path to the Grave feature sets up one-shots by making creatures vulnerable to the next attack.

Surely, the designers defending such features would cite two points:

- Players relish the occasional nova. They can feel like an exploit that breaks the game, delivering a quick win.

- Some spells shut down an encounter as effectively as massive weapon damage does. Fair’s fair.

I argue that encounter-breaking spells rate as problematic too, but D&D traditionally limits such spells to a few spellcasting classes, often at higher levels and only once per day.

Highest AC

I accept that as a DM controlling the monsters, I will almost always lose. A defeat for my team evil counts as a win for the table, so I welcome the loss. But I must confess something: For my fun, I like the monsters to get some licks in. Is that so wrong? Under suggestion and zone of truth, I suspect other DMs would echo the same admission. Some gamers even float the courageous suggestion that DMs deserve fun like the players.

A character with an untouchable AC doesn’t rob the spotlight from other players, but for DMs, such characters become tiresome. If you back up a maximum AC with, say, a class able to cast shield and block those rare hits, then your DM might not show disappointment when you miss game night.

To be fair, players who sell out for maximum defense wind up with few other strengths. These players enjoy their chance to shine at the end of every fight when they crow about not taking damage—again! I’ve learned to accept their source of bliss and welcome their characters. They may soak attacks, turning claw, claw, bite into useless flailing, but I can always add more attacks to go around.

Toughest

In theory, tough characters should trigger the same annoyance as untouchable characters, but barbarians and Circle of the Moon druids actually suffer hits, so their durability feels different.

In tactically minded parties, tough characters and characters with high AC fill a role by preventing monsters from reaching more fragile characters. If your group favors that play style, your DM surely dials the opposition up past very strong and pairs smart foes with clever strategies. Optimized characters of all sorts often fit that style of play.

Fastest

Nobody minds a fast character. I love playing monks who speed around the battlefield stunning everything in their path. However, those stun attacks certainly bring less acclaim. See How to Build a D&D Monk So Good That DMs Want to Cheat.

Most healing

If you play the healer and miss game night, everyone feels disappointed. ’Nuff said.

Related:

• If D&D Play Styles Could Talk, the One I Hate Would Say, “I Won D&D for You. You’re Welcome.”

• 10 Ways to Build a Character That Will Earn the Love of Your Party

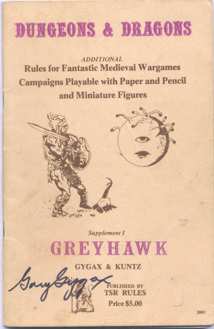

D&D’s designers seemed to think rising armor classes made more sense. The game rules stemmed from co-creator Gary Gygax’s

D&D’s designers seemed to think rising armor classes made more sense. The game rules stemmed from co-creator Gary Gygax’s